I am a staff software engineer at Google, developing distributed software systems for

data-center and WAN networks, as a member of

NetInfra team.

Before that, I was a graduate student at Stanford

University, where I received a Ph.D. degree in Electrical Engineering, and a

Ph.D. minor degree in Computer Science, in 2017. My interests include cloud computing,

distributed systems, and networking systems. At Stanford, I worked with

Professor Philip Levis as a member of Stanford Information Networks Group

(SING). I received my M.S.

degree from Stanford University in 2013, and B.S. degree from Sharif University

of Technology in 2011, both in Electrical Engineering.

Projects

Main Research Projects

Nimbus

Nimbus is a general purpose cloud computing system specially

designed for computations with short tasks. Nimbus is developed in

C++ and the API offers a data model similar to

Spark and

Naiad.

The key difference between Nimbus and its counterparts is the novel

control plane abstraction called Execution

Templates. For jobs with

short tasks the runtime overhead becomes comparable to the task

itself. For example for an application with 4ms tasks, Sparks

runtime overhead is about 98%. Execution templates enable Nimbus to

schedule and drive jobs with tasks as short as 100us over large

number of workers at scale with negligible runtime overhead. For

more information visit Nimbus home page.

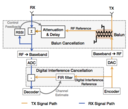

Janus

In this project, we investigate novel techniques to design MAC

protocol for the Full Duplex radio. This project follows SING’s

earlier paper on single channel full duplex wireless network (please

see here), which explores feasible

implementation of the physical layer for efficient simultaneous

transmission and reception. We consider the very fundamental

characteristics of a full duplex network and try to design a MAC

protocol which perfectly takes the advantages of the freedom

provided by the physical layer. Specially, the goal is to improve

the throughput as much as two times of the ordinary half duplex

systems while remaining fair to all the users. You can find our

paper here.

Older Research Projects

Overloaded Codes

As my undergraduate research thesis, I worked on developing

overloaded codes with efficient decoders for CDMA systems. These

codes not only have really simple decoder but also show the

bit-error-rate (BER) performance almost as good as the

maximum-likelihood (ML) decoder. The novel coding matrices are

called logical signature matrices (LSM), which benefit from

comparison-based decoders. In addition to the published

paper,

this work also received a US patent.

Selected Short Projects

x86 Runtime Prediction

Predicting x86 program runtime for Intel processor. As a part of

the machine learning course at Stanford (CS229), we

developed a series of supervised learning methods for predicting

the runtime length of serialized x86 programs for Intel

processors. Our generative model predicts runtime for programs

with arbitrary numbers of instructions with 12% error.

Packet Classification

Novel and optimized methods for packet classification when both

rules and keys contain wildcard expressions. This work introduces

a software based algorithm which is memory efficient, does not

need expansion for rules and keys containing wildcards, is

scalable, and provides multi-match results.

DCell with OpenFlow

Simulating DCell topology and routing for data centers using

Mininet and OpenFlow controller. Each year, advanced networking

course at Stanford (CS244) publishes a blog on reproducing the results reported in

the literature by students. Yo can find our report here .

Cognitive Radio Devices

This work is a literature survey on cognitive radio devices as a

part of wireless networking course at Stanford (EE359).

Publications

Dissertation

Today, cloud computing frameworks adopt one of two strategies to

schedule their computations over hundreds to thousands of machines. In

the centralized strategy, a controller dispatches computation units,

called tasks, to worker nodes. Centralization allows a framework to

quickly reschedule, respond to faults, and mitigate stragglers.

However, a centralized controller can only schedule a few thousand

tasks per second and becomes a bottleneck. In the distributed

strategy, there is no central node. These systems install data flow

graphs on each node, which then independently execute and exchange

data. By distributing the control plane and turning it into data flow,

these frameworks can execute hundreds of thousands of tasks per

second, and do not have a control plane bottleneck. However, data flow

graphs describe a static schedule; even small changes, such as

migrating a task between two nodes, requires stopping all nodes,

recompiling the flow graph and reinstalling it on every node. This

leads to high latency or wasteful resource utilization for

rescheduling, fault recovery, or straggler mitigation.

This dissertation presents a new, third strategy, called execution

templates. Execution templates leverage a program’s repetitive

control flow to cache control plane decisions in templates. A template

is a parametrisable block of tasks: it caches some information (e.g.,

task dependencies) but instantiation requires some parameters (e.g.,

task identifiers). Executing the cached tasks in a template requires

sending a single message that loads the new parameters. Large-scale

scheduling changes install new templates, while small changes apply

edits to existing templates. Execution templates are not bound to a

static control flow and efficiently capture nested loops and data

dependent branches. Evaluation of execution templates in Nimbus, a

cloud computing framework, shows that they provide the fine-grained

scheduling flexibility of centralized control planes while matching

the performance of the distributed ones. Execution templates in Nimbus

support not only the traditional data analytics, but also complex,

scientific applications such as hybrid graphical simulations.

Papers

-

Jupiter Evolving: Transforming Google's Datacenter Network via Optical Circuit Switches and Software-Defined Networking

-

Leon Poutievski, Omid Mashayekhi, Joon Ong, Arjun Singh,

Mukarram Tariq, Rui Wang, Jianan Zhang, Virginia Beauregard,

Patrick Conner, Steve Gribble, Rishi Kapoor, Stephen Kratzer,

Nanfang Li, Hong Liu, Karthik Nagaraj, Jason Ornstein, Samir

Sawhney, Ryohei Urata, Lorenzo Vicisano, Kevin Yasumura,

Shidong Zhang, Junlan Zhou, Amin Vahdat

-

In Proceedings of ACM SIGCOMM 2022

-

Abstract

We present a decade of evolution and production experience with

Jupiter datacenter network fabrics. In this period Jupiter has

delivered 5x higher speed and capacity, 30% reduction in capex, 41%

reduction in power, incremental deployment and technology refresh

all while serving live production traffic. A key enabler for these

improvements is evolving Jupiter from a Clos to a direct-connect

topology among the machine aggregation blocks. Critical

architectural changes for this include: A datacenter

interconnection layer employing Micro-ElectroMechanical Systems

(MEMS) based Optical Circuit Switches (OCSes) to enable dynamic

topology reconfiguration, centralized Software-Defined Networking

(SDN) control for traffic engineering, and automated network

operations for incremental capacity delivery and topology

engineering. We show that the combination of traffic and topology

engineering on direct-connect fabrics achieves similar throughput

as Clos fabrics for our production traffic patterns. We also

optimize for path lengths: 60% of the traffic takes direct path

from source to destination aggregation blocks, while the remaining

transits one additional block, achieving an average blocklevel path

length of 1.4 in our fleet today. OCS also achieves 3x faster

fabric reconfiguration compared to pre-evolution Clos fabrics that

used a patch panel based interconnect.

Distributing a simulation across many machines can drastically speed

up computations and increase detail. The computing cloud provides

tremendous computing resources, but weak service guarantees

force programs to manage significant system complexity: nodes,

networks, and storage occasionally perform poorly or fail.

We describe Nimbus, a system that automatically distributes

grid-based and hybrid simulations across cloud computing

nodes. The main simulation loop is sequential code

and launches

distributed computations across many cores. The simulation on each

core runs as if it is stand-alone: Nimbus automatically

stitches these simulations into a single, larger

one. To do this efficiently, Nimbus introduces a four-layer

data model that translates between the contiguous, geometric objects

used by simulation libraries and the replicated, fine-grained objects

managed by its underlying cloud computing runtime.

Using PhysBAM particle level set fluid simulations, we

demonstrate that Nimbus can run higher detail simulations faster,

distribute simulations on up to 512 cores, and run enormous

simulations (10243 cells). Nimbus automatically manages

these distributed simulations, balancing load across nodes and recovering

from failures. Implementations of PhysBAM water and smoke simulations

as well as an open source heat-diffusion simulation show that Nimbus is

general and can support complex simulations.

Nimbus can be downloaded from https://nimbus.stanford.edu.

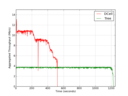

Control planes of cloud frameworks trade off between scheduling

granularity and performance. Centralized systems schedule at task

granularity, but only schedule a few thousand tasks per second.

Distributed systems schedule hundreds of thousands of tasks per

second but changing the schedule is costly.

We present execution templates, a control plane abstraction that can

schedule hundreds of thousands of tasks per second while supporting

fine-grained, per-task scheduling decisions. Execution templates

leverage a program’s repetitive control flow to cache blocks of

frequently-executed tasks. Executing a task in a template requires

sending a single message. Large-scale scheduling changes install new

templates, while small changes apply edits to existing templates.

Evaluations of execution templates in Nimbus, a data analytics

framework, find that they provide the fine-grained scheduling

flexibility of centralized control planes while matching the strong

scaling of distributed ones. Execution templates support complex,

real-world applications, such as a fluid simulation with a triply

nested loop and data dependent branches.

Existing cloud computing control planes do not scale to more than

a few hundred cores, while frameworks without control planes

scale but take seconds to reschedule a job. We propose an asynchronous

control plane for cloud computing systems, in which

a central controller can dynamically reschedule jobs but worker

nodes never block on communication with the controller. By decoupling

control plane traffic from program control flow in this way,

an asynchronous control plane can scale to run millions of computations

per second while being able to reschedule computations

within milliseconds.

We show that an asynchronous control plane can match the

scalability and performance of TensorFlow and MPI-based programs

while rescheduling individual tasks in milliseconds. Scheduling

an individual task takes 1μs, such that a 1,152 core cluster can

schedule over 120 million tasks/second and this scales linearly with

the number of cores. The ability to schedule huge numbers of tasks

allows jobs to be divided into very large numbers of tiny tasks,

whose improved load balancing can speed up computations 2.1-

2.3×.

This paper presents Janus, a novel MAC protocol for

full–duplex wireless networks. Unlike other full-duplex

MACs, Janus allows partially interfering nodes to cooperate

by finding the appropriate transmission rates based on

interference levels, making better use of the channel. Computing

the optimal schedule and transmission rates is NPComplete,

so Janus uses a cheaper heuristic approach. Janus

also ensures that channel access time is shared fairly between

all nodes. Janus has lower per-packet overhead compared

to CSMA/CA because it eliminates random back-off

and lets nodes transmit multiple packets with a single set of

control packets. We show that for a setup with one access

point and three nodes, Janus achieves 2.5X the throughput

of half–duplex system based on CSMA/CA.

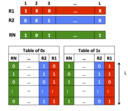

In this paper, we introduce a new class of signature matrices for

overloaded synchronous CDMA systems that have a very low complexity

decoder. While overloaded systems are more efficient from the

bandwidth point of view, the Maximum Likelihood (ML) implementation

for decoding is impractical even for moderate dimensions. Simulation

results show that the performance of the proposed decoder is very

close to that of the ML decoder. Indeed, the proposed decoding

scheme needs neither multiplication nor addition and requires only a

few comparisons . Furthermore, the computational complexity and the

probability of error vs. Signal to Noise Ratios (SNR) are derived

analytically.

Presentations

Technical Reports

Graphical simulations are a cornerstone of modern media and

films. But existing software packages are designed to run on HPC

nodes, and perform poorly in the computing cloud. These

simulations have complex data access patterns over complex data

structures, and mutate data arbitrarily, and so are a poor fit for

existing cloud computing systems. We describe a software

architecture for running graphical simulations in the cloud that

decouples control logic, computations and data exchanges. This

allows a central controller to balance load by redistributing

computations, and recover from failures. Evaluations show that the

architecture can run existing, state-of-the-art simulations in the

presence of stragglers and failures, thereby enabling this large

class of applications to use the computing cloud for the first

time.

Large scale cloud data analytics applications are often CPU

bound. Most of these cycles are wasted: benchmarks written in C++ run

10-51 times faster than frameworks such as Naiad and Spark. However,

calling faster implementations from those frameworks only sees

moderate (4-5x) speedups because their control planes cannot schedule

work fast enough.

This paper presents execution templates, a control plane abstraction

for CPU-bound cloud applications, such as machine learning. Execution

templates leverage highly repetitive control flow to cache scheduling

decisions as templates. Rather than reschedule hundreds of

thousands of tasks on every loop execution, nodes instantiate these

templates. A controller's template specifies the execution across all

worker nodes, which it partitions into per-worker templates. To ensure

that templates execute correctly, controllers dynamically patch

templates to match program control flow. We have implemented

execution templates in Nimbus, a C++ cloud computing framework.

Running in Nimbus, analytics benchmarks can run 16-43 times faster

than in Naiad and Spark. Nimbus's control plane can scale out to run

these faster benchmarks on up to 100 nodes (800 cores).

We present Canary, a scheduling architecture that allows

high performance analytics workloads to scale out to run

on thousands of cores. Canary is motivated by the observation

that a central scheduler is a bottleneck for high

performance codes: a handful of multicore workers can

execute tasks faster than a controller can schedule them.

The key insight in Canary is to reverse the responsibilities

between controllers and workers. Rather than

dispatch tasks to workers, which then fetch data as necessary,

in Canary the controller assigns data partitions to

workers, which then spawn and schedule tasks locally.

We evaluate three benchmark applications in Canary

on up to 64 servers and 1,152 cores on Amazon EC2.

Canary achieves up to 9−90× speedup over Spark and

up to 4× speedup over GraphX, a highly optimized graph

analytics engine. While current centralized schedulers

can schedule 2,500 tasks/second, each Canary worker can

schedule 136,000 tasks/second per core and experiments

show this scales out linearly, with 64 workers scheduling

over 120 million tasks per second, allowing Canary to

support optimized jobs running on thousands of cores.

Resume

Here are the links to my single-page and extended resumes.